In this article, we will see how to create a database in SQL Server. We will also explore various options while creating a database.

Creating SQL Server databases can be achieved by two different ways as explained below -

1. You can make use of SQL Server Management Studio (SSMS) and Create Database wizard to create the database.

2. You can create the database programmatically by writing scripts.

If you are using the database wizard for creating the database, you will have limited options while creating your database. Most developers and administrators prefer to create the databases programmatically by writing scripts. These scripts can then be easily deployed on other SQL Server instances where you want to create the same database with the same configurations.

In this article, we will explore some configuration options as described below -

- Create database using database wizard

- Create database using script

- Create database with files and file groups

- Configuring File Stream during database creation

- Configuring recovery model during database creation

- Creating database with Collation option

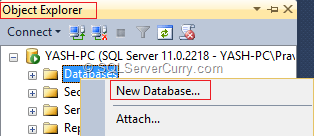

Create database using database wizard

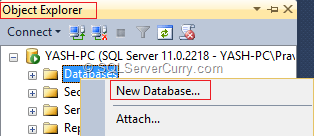

To start with, we will opt for SQL Server Management Studio [SSMS]. Once you open SSMS, in an Object Explorer window, we will right click the Databases folder and create a new database as shown below -

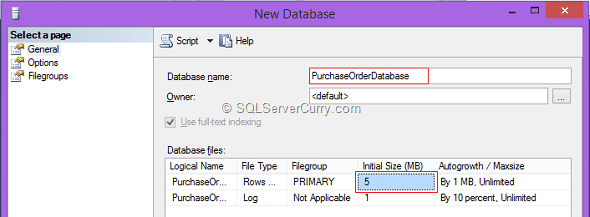

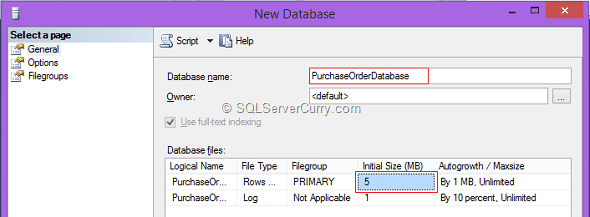

This step will show you a New Database window. Fill the required details as shown below -

You can give a database name and set the owner of the database. Also look at the database files. Check out the initial size of the database. In my case, it is 5 MB. The question is where is this size coming from?

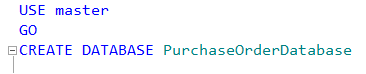

Creating simple database using script

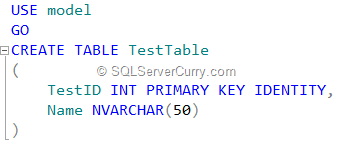

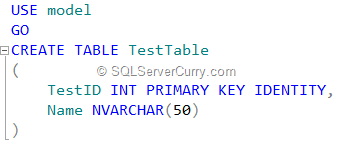

In SQL Server the "Model" [System Database] acts as a template for all the databases. Whatever the settings of Model, the same settings are applied to your new database. For example, let's add a simple table to our Model database and then create a PurchaseOrderDatabase. The table script is as shown below -

Note: All these scripts shown in this article can be

downloaded from here

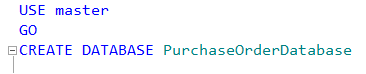

After creation of the table, create a database as shown in below script -

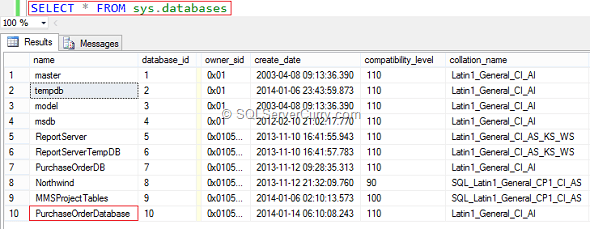

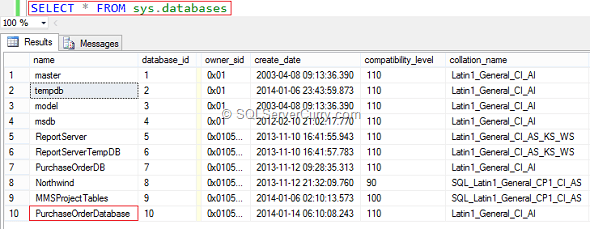

After creating the database, let's see how to select all the available databases under a given SQL Server instance. You can find the same under a

view available under master database -

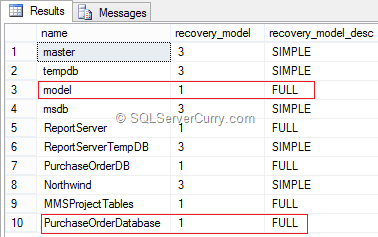

sys.databases. The output of the sys.databases is as shown below -

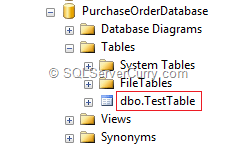

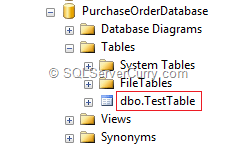

You can now expand the PurchaseOrderDatabase using Object Explorer and check the tables folder. You will find "TestTable" created under Model database.

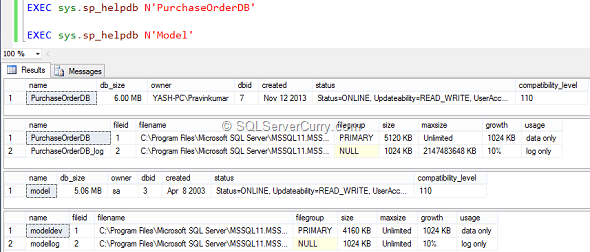

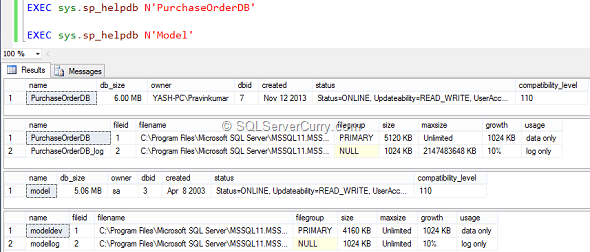

This table got copied into our PurchaseOrderDatabase, as Model works as a template for the custom databases. You can also make use of 'sp_helpdb' stored procedure as shown below -

The result of sp_helpdb stored procedure gives the output shown as above. It shows the information about the database as well as the files created for the database for both PurchaseOrderDatabase as well as Model database. So that’s about the Model database!

Create database with files and file groups

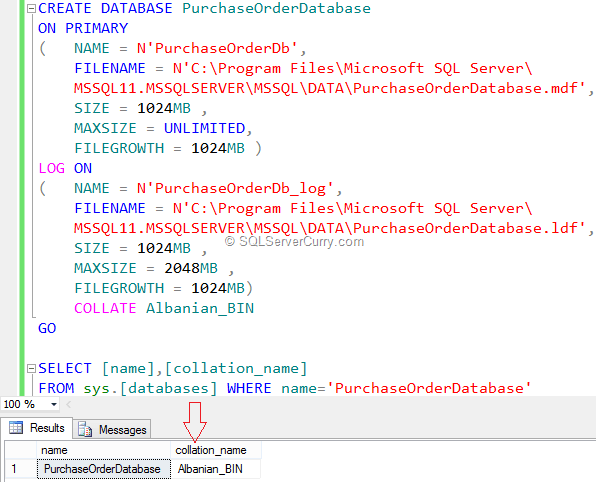

While writing the Create Database script in our query, we did not mention any other options. We will explore some options that can be used while creating the database. Let's first drop the PurchaseOrderDatabase so that we can create the same database with different options.

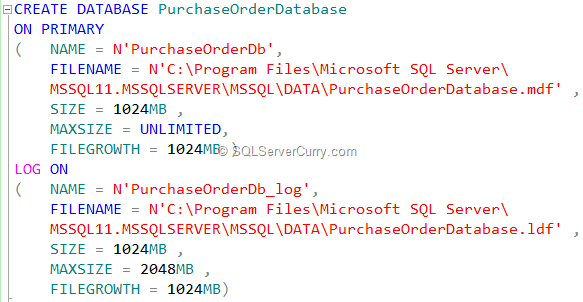

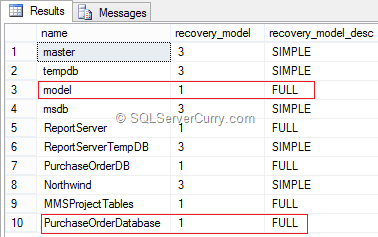

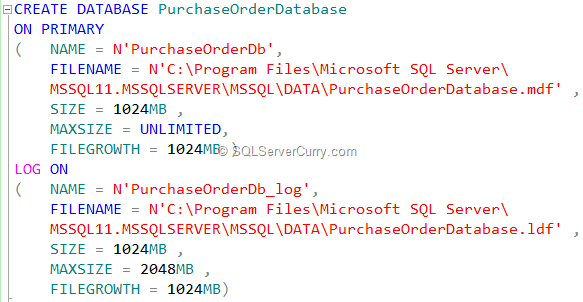

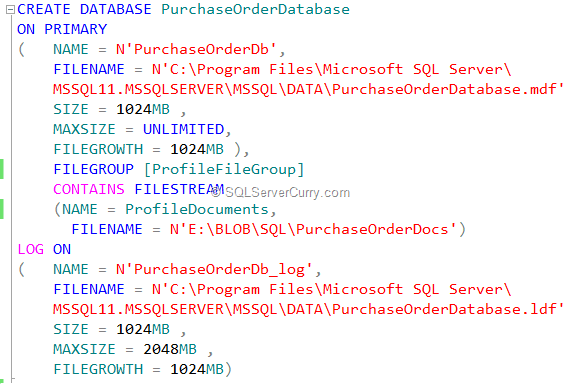

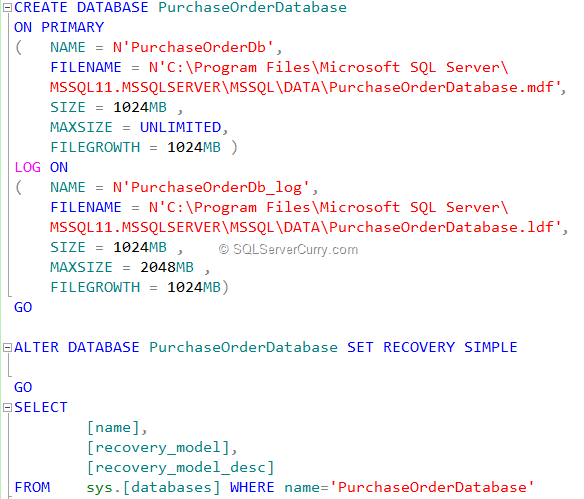

We will create the database using data files and file group options. Let's write the script as shown below to create a database PurchaseOrderDatabase -

When you create a database, you have to provide at least two files - Primary file and Transaction Log File. Also you have to specify at least one file group while creating the database. In the above script, we have specified the size of the primary data file, maximum size of the database and file growth in Mega bytes. You can specify the size of the file in KB, MB, GB or TB. The default is MB.

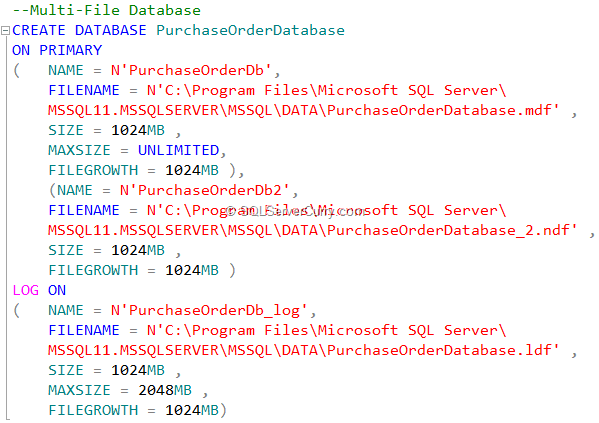

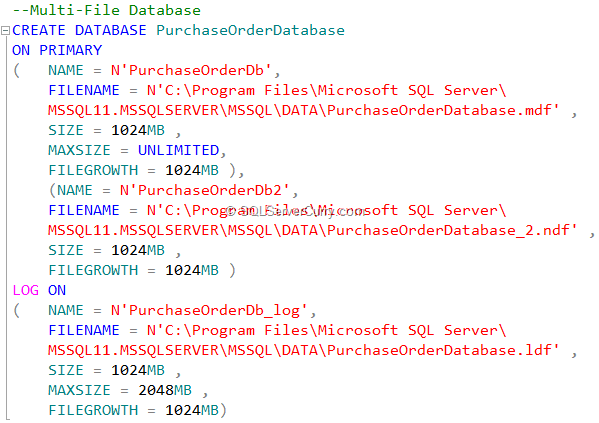

You can also create the database using multiple data files with the extension .ndf which are also known as secondary data files as shown below -

Configuring File Stream during database creation

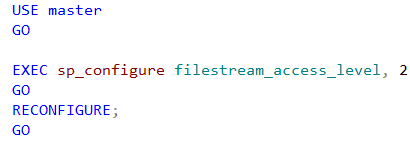

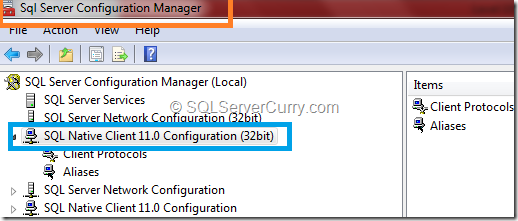

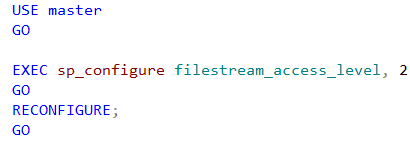

Now let's create a database with File stream. File Stream is used to store unstructured data like documents and images on NTFS file system with the help of VARBINARY(MAX) type. By default, the file stream is not enabled on SQL Server. To enable the file stream access level on SQL Server, write the following command -

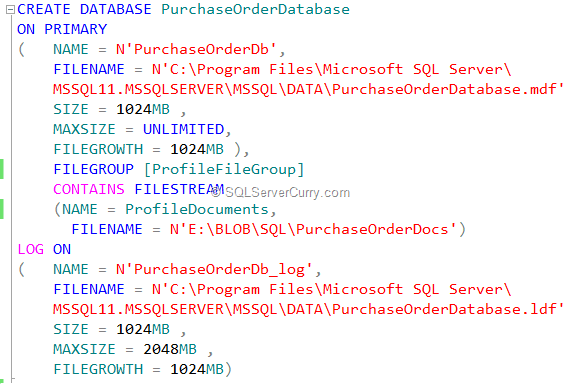

After enabling the filestream access level, let's create a database with the file stream option as shown below -

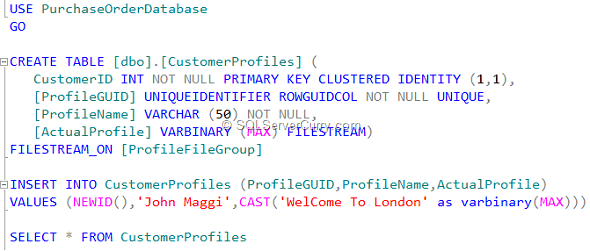

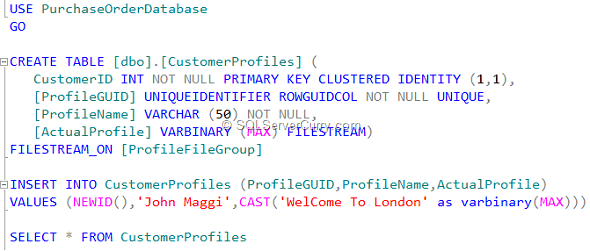

To test the file stream database, we will create a table with File Stream and will try to insert some data in the same, as shown below -

While creating the database, you can specify the database recovery model. There are three options which you can avail for recovery model -

1.

Simple - In this recovery model, there is no log backup. Also you cannot use Log shipping or point-in-time restores.

2.

Full - In this recovery model, all backup types are permitted. Point-in-time recovery is possible.

3.

Bulk Logged - In this recovery model, all backup types are permitted. No point-in-time recovery is supported. Recovery is possible at the end of any backup.

Configuring recovery model during database creation

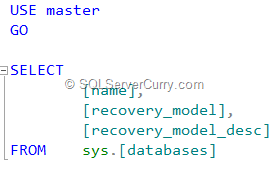

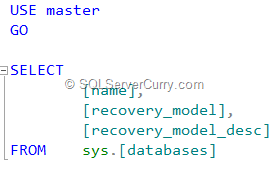

We will now create a database with recovery options. But before that, we will see what is the default recovery option set on our database. For this, we will again make use of sys.databases system view as shown below -

The output is as shown below -

Below is a script for database creation with the recovery options -

Creating database with Collation option

Finally, we have the last option to explore, i.e. create a database with collation option. SQL Server supports number of Collations.

Many cultures around the world use different character sets. Simply put, a collation encodes the rules that govern the proper use of characters for an alphabet or language.

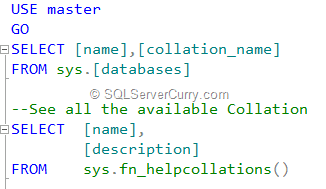

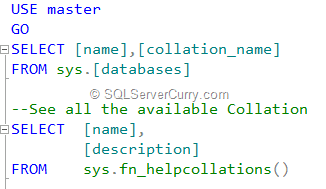

Let's create a database with our choice of Collation. Before that we will also see what is the default collation set on our databases as well as all the available Collation with the help of

sys.fn_helpcollations(). The queries are as below -

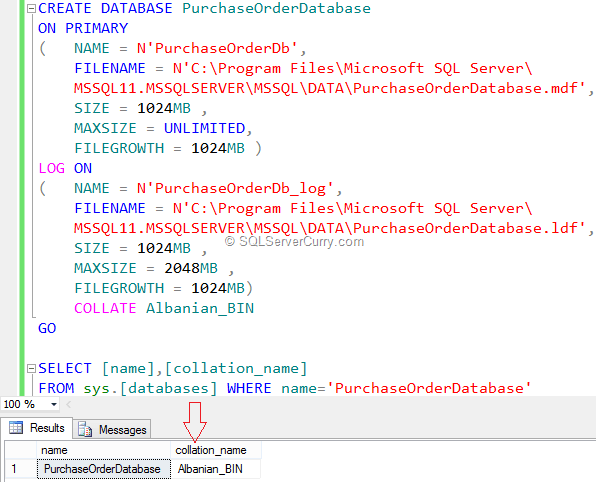

The query for creating a database with the different collations is as shown below -

And that’s it!!

Conclusion

In this article, we have seen how to create a database in SQL Server using a wizard and by writing scripts using SQL Server Management Studio [SSMS] with various options like files and file groups, with file stream option, with recovery model and with collation option.

The scripts shown in this article can be

downloaded from here

![clip_image001[6] clip_image001[6]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgH5mpeHe0IK7K09aXP1x1_iGc_SHDtLw8igv_UR6n_lTMIsq6WqtUTemdj9FgG55ge9jOW8-7E7V7YP1rGDFxczGG9Sv0fFvaz3-Jfa8N-RpWhQm5NK6YMz3v0Aia2McrmoBq3wbms4w1w/?imgmax=800)

![clip_image002[10] clip_image002[10]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjNX0uW9-QjhzzxFjmPQPc2ukFGKV2cFOKKyYEnzEmSOfv2Zwz391bxNQCPfNGVzYYIpcnP6jN-DGYoNlOwZKy4V0kRnwi69H15c-237yoJ0Ldlx-Mi-najQ52ZN0OhXrZhj7MyFH3rwRJm/?imgmax=800)